Introduction: Why Enterprises Are Moving Toward Private LLMs

As generative AI moves from experimentation to mission-critical adoption, many enterprises are finding that public, shared large language models are not sufficient for high-stakes business use cases.

Concerns around data privacy, IP protection, regulatory compliance, model transparency, and long-term control are pushing organizations to explore private LLM deployment models: architectures that give enterprises ownership, isolation, and governance over their AI systems.

Private LLMs are not about rejecting innovation; they are about deploying GenAI responsibly, securely, and at enterprise scale.

What Is a Private LLM Deployment?

A private LLM deployment refers to an architecture where:

- The model runs in a dedicated enterprise environment

- Enterprise data is never used to train public or shared models

- Access is restricted to authorized users and systems

- Governance, security, and customization are fully under enterprise control

Private deployments allow organizations to leverage generative AI without compromising trust or compliance.

Why Public LLMs Fall Short for Enterprise Use

Public GenAI platforms are powerful but introduce limitations:

- Limited visibility into training data and model behavior

- Risk of sensitive data exposure through prompts or outputs

- Inflexible governance and limited auditability

- Regulatory and jurisdictional concerns

- Vendor lock-in risks

For regulated industries and IP-driven enterprises, these limitations can block production deployment.

Core Private LLM Deployment Models

1. On-Premises Private LLM Deployment

In this model, LLMs are hosted entirely within the enterprise’s own data centers.

Best suited for:

- Highly regulated industries

- Organizations with strict data residency rules

- Enterprises with existing AI infrastructure

Advantages:

- Maximum data control and isolation

- Direct enforcement of internal security policies

- No data needs to leave the enterprise perimeter

Challenges:

- High infrastructure and maintenance costs

- Slower scalability

- Requires strong internal AI and MLOps expertise

2. Private Cloud-Based LLM Deployment

Here, LLMs are deployed in a dedicated cloud environment (VPC or isolated tenant).

Best suited for:

- Enterprises seeking scalability with strong security

- Hybrid or cloud-first organizations

Advantages:

- Elastic scaling and performance

- Strong isolation from public workloads

- Easier model upgrades and management

Challenges:

- Requires careful cloud security architecture

- Dependency on cloud provider capabilities

This model balances control and agility, which makes it a common choice for enterprises.

3. Hybrid LLM Deployment Model

Hybrid models combine:

- On-premises data storage

- Cloud-based inference or fine-tuning

Best suited for:

- Enterprises modernizing legacy systems

- Organizations transitioning to cloud gradually

Advantages:

- Sensitive data stays on-premises

- Compute-heavy workloads leverage cloud scalability

- Flexible compliance strategy

Challenges:

- Complex integration and orchestration

- Requires strong data governance frameworks
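In practice, the core of a hybrid setup is a routing decision: which requests may leave the data center and which must stay. A minimal sketch, assuming a simple classification label on each request plus a few pattern checks; the endpoint URLs, the `restricted`/`regulated` labels, and `route_request` are hypothetical, and a real deployment would typically rely on a dedicated data-classification or DLP service rather than regexes.

```python
import re
from dataclasses import dataclass

# Hypothetical endpoints, for illustration only.
ON_PREM_ENDPOINT = "https://llm.onprem.example.internal/v1"
CLOUD_ENDPOINT = "https://llm.private-cloud.example/v1"

# Illustrative detectors only; not a substitute for proper data classification.
SENSITIVE_PATTERNS = [
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),            # US SSN-style identifiers
    re.compile(r"\b(?:\d[ -]?){12,18}\d\b"),          # card-number-style digit runs (13-19 digits)
    re.compile(r"\bconfidential\b", re.IGNORECASE),   # explicit sensitivity labels
]

# Classification labels that must always stay on-premises (assumed scheme).
ON_PREM_ONLY_LABELS = {"restricted", "regulated"}


@dataclass(frozen=True)
class RoutingDecision:
    endpoint: str
    reason: str


def route_request(prompt: str, classification: str = "internal") -> RoutingDecision:
    """Keep sensitive requests on-premises; send everything else to cloud inference."""
    if classification in ON_PREM_ONLY_LABELS:
        return RoutingDecision(ON_PREM_ENDPOINT, f"classification={classification}")
    for pattern in SENSITIVE_PATTERNS:
        if pattern.search(prompt):
            return RoutingDecision(ON_PREM_ENDPOINT, f"matched {pattern.pattern}")
    return RoutingDecision(CLOUD_ENDPOINT, "no sensitive markers detected")


decision = route_request("Customer SSN is 123-45-6789")
print(decision.endpoint)  # the on-premises endpoint, because the SSN pattern matched
```

Routing on-premises by default whenever a check fires keeps the failure mode conservative: a false positive costs some cloud capacity, while a false negative could expose regulated data.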

4. Vendor-Hosted Dedicated LLM Instances

Some providers offer single-tenant, dedicated LLM instances hosted and managed by the vendor.

Best suited for:

- Enterprises needing faster time-to-value

- Organizations lacking internal AI operations teams

Advantages:

- Reduced operational overhead

- Enterprise-grade SLAs

- Custom security and compliance controls

Challenges:

- Lower customization than fully private deployments

- Potentially higher long-term costs at scale

Key Architecture Components of Private LLM Deployments

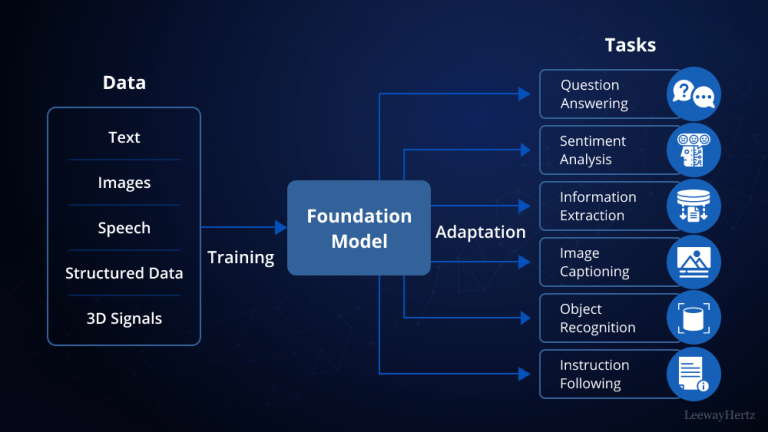

Model Selection and Customization

Enterprises must decide:

- Open-source vs proprietary models

- Base model size and architecture

- Fine-tuning vs retrieval-augmented generation (RAG)

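Customization enables domain-specific accuracy and relevance. Fine-tuning adapts the model's weights to domain language and tasks, while RAG grounds answers in current enterprise documents at query time; many deployments combine both.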
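Base model size largely determines the hardware a private deployment needs. As a rough rule, weight memory is the parameter count multiplied by the bytes per parameter at the chosen precision, so a 7B-parameter model needs about 14 GB in fp16 and about 3.5 GB at 4-bit. The sketch below turns that arithmetic into a quick sizing helper; the function names are illustrative, and the 20% overhead for KV cache, activations, and runtime is an assumed placeholder rather than a measured figure.

```python
# Approximate bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Memory to hold the model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    if precision not in BYTES_PER_PARAM:
        raise ValueError(f"unknown precision: {precision!r}")
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def serving_memory_gb(num_params: float, precision: str, overhead: float = 0.2) -> float:
    """Weights plus a flat, assumed overhead fraction for KV cache, activations, and runtime.

    The 20% default is a placeholder: real overhead grows with context length
    and batch size and should be measured on the target serving stack.
    """
    return weight_memory_gb(num_params, precision) * (1.0 + overhead)


for size in (7e9, 70e9):
    for precision in ("fp16", "int8", "int4"):
        print(f"{size / 1e9:.0f}B @ {precision}: "
              f"weights ~{weight_memory_gb(size, precision):.1f} GB, "
              f"serving ~{serving_memory_gb(size, precision):.1f} GB")
```

The same arithmetic shows why quantization matters for cost: halving bytes per parameter roughly halves the GPU memory needed just to load the model.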

Data Layer and Knowledge Integration

Private LLMs typically integrate with:

- Internal knowledge bases

- Enterprise document repositories

- Structured and unstructured data sources

Strong data pipelines help keep outputs accurate and context-aware while preventing data leakage, for example by enforcing document-level permissions at retrieval time.
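Retrieval is where the data layer meets the model. A minimal sketch of permission-aware RAG retrieval, assuming each document carries a set of groups allowed to read it: documents the user cannot access are filtered out before ranking, and the remaining matches are assembled into a grounded prompt. The bag-of-words cosine similarity is a deliberately simple stand-in for an embedding model and vector store, and `Document`, `retrieve`, and `build_prompt` are hypothetical names.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Document:
    doc_id: str
    text: str
    allowed_groups: set = field(default_factory=set)  # groups permitted to read this document


def _vectorize(text: str) -> Counter:
    """Bag-of-words term counts; a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list, user_groups: set, k: int = 2) -> list:
    """Return up to k relevant documents that the user is permitted to see."""
    q = _vectorize(query)
    # Enforce access control BEFORE ranking so restricted text never reaches the prompt.
    permitted = [d for d in docs if d.allowed_groups & user_groups]
    scored = [(_cosine(q, _vectorize(d.text)), d) for d in permitted]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for score, d in scored[:k] if score > 0]


def build_prompt(query: str, context_docs: list) -> str:
    context = "\n".join(f"[{d.doc_id}] {d.text}" for d in context_docs)
    return ("Answer using only the context below and cite document IDs.\n\n"
            f"Context:\n{context}\n\nQuestion: {query}")


corpus = [
    Document("hr-001", "Employees accrue 20 vacation days per year.", {"hr", "all-staff"}),
    Document("fin-007", "The Q3 revenue forecast is restricted to finance.", {"finance"}),
    Document("it-042", "Reset your VPN password through the IT self-service portal.", {"all-staff"}),
]
question = "How many vacation days do employees get?"
print(build_prompt(question, retrieve(question, corpus, {"all-staff"})))
```

Filtering before ranking, rather than after generation, means a user can never receive an answer derived from a document they could not have opened directly.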

Security and Governance Controls

Private LLM deployments must embed:

- Role-based access control

- Prompt and output monitoring

- Audit logs and traceability

- Policy enforcement mechanisms

Together, these controls support trust, compliance, and explainability.
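The four controls above can be combined in a thin gateway that sits in front of the model. A minimal sketch, assuming a static role-to-task mapping and regex-based redaction as the policy; `GovernedGateway`, `ROLE_PERMISSIONS`, and the redaction rules are hypothetical, and a production system would typically source roles from the corporate identity provider and write audit records to an append-only store instead of a Python list.

```python
import json
import re
import time
from typing import Callable, List

# Assumed role-to-task mapping (role-based access control).
ROLE_PERMISSIONS = {
    "analyst": {"summarize", "qa"},
    "engineer": {"summarize", "qa", "code"},
}

# Illustrative redaction rules, applied to prompts, outputs, and audit records.
REDACTION_RULES = [
    (re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"), "[EMAIL]"),
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "[SSN]"),
]


def redact(text: str) -> str:
    for pattern, placeholder in REDACTION_RULES:
        text = pattern.sub(placeholder, text)
    return text


class GovernedGateway:
    """Wraps a model call with RBAC, redaction (policy enforcement), and audit logging."""

    def __init__(self, model_fn: Callable[[str], str], audit_log: List[str]):
        self.model_fn = model_fn
        self.audit_log = audit_log  # stand-in for an append-only, tamper-evident store

    def handle(self, user: str, role: str, task: str, prompt: str) -> str:
        allowed = task in ROLE_PERMISSIONS.get(role, set())
        record = {"ts": time.time(), "user": user, "role": role, "task": task,
                  "allowed": allowed, "prompt": redact(prompt)}
        if not allowed:
            self.audit_log.append(json.dumps(record))  # denied requests are logged too
            raise PermissionError(f"role {role!r} may not run task {task!r}")
        output = redact(self.model_fn(redact(prompt)))  # monitor both prompt and output
        record["output"] = output
        self.audit_log.append(json.dumps(record))
        return output


log: List[str] = []
gateway = GovernedGateway(lambda p: f"Summary: {p}", log)  # fake model for the demo
print(gateway.handle("alice", "analyst", "summarize",
                     "Email bob@example.com about SSN 123-45-6789"))
```

Logging denied requests as well as successful ones is what makes the audit trail useful for incident review, not just usage reporting.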

Operationalizing Private LLMs at Scale

MLOps and Model Lifecycle Management

Enterprise success depends on:

- Version control for models

- Continuous performance monitoring

- Model retraining and rollback strategies

- Cost and usage optimization

Without MLOps, private LLMs become hard to maintain and scale.
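Version control, evaluation gates, and rollback can be illustrated with a small in-memory registry. This is a sketch under stated assumptions: each version has a single evaluation score, promotion requires clearing a minimum score, and rollback reverts to the previously promoted version. `ModelRegistry`, the `registry://` URIs, and the scores are hypothetical; real teams would typically use a dedicated model registry backed by persistent storage.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass(frozen=True)
class ModelVersion:
    version: str
    artifact_uri: str   # hypothetical storage location of weights and config
    eval_score: float   # score on an internal evaluation suite (assumed metric)


@dataclass
class ModelRegistry:
    versions: Dict[str, ModelVersion] = field(default_factory=dict)
    promotions: List[str] = field(default_factory=list)  # production history, newest last

    def register(self, mv: ModelVersion) -> None:
        if mv.version in self.versions:
            raise ValueError(f"version {mv.version} already registered")
        self.versions[mv.version] = mv

    def promote(self, version: str, min_score: float) -> None:
        """Promote to production only if the version clears the evaluation gate."""
        mv = self.versions[version]
        if mv.eval_score < min_score:
            raise ValueError(f"{version} scored {mv.eval_score}, below gate {min_score}")
        self.promotions.append(version)

    @property
    def production(self) -> Optional[ModelVersion]:
        return self.versions[self.promotions[-1]] if self.promotions else None

    def rollback(self) -> ModelVersion:
        """Revert production to the previously promoted version."""
        if len(self.promotions) < 2:
            raise RuntimeError("no earlier production version to roll back to")
        self.promotions.pop()
        return self.versions[self.promotions[-1]]


registry = ModelRegistry()
for v, score in [("v1", 0.82), ("v2", 0.86), ("v3", 0.79)]:
    registry.register(ModelVersion(v, f"registry://support-llm/{v}", score))

registry.promote("v1", min_score=0.80)
registry.promote("v2", min_score=0.80)
try:
    registry.promote("v3", min_score=0.80)  # v3 fails the gate; production stays on v2
except ValueError as err:
    print("blocked:", err)
print("rolled back to", registry.rollback().version)  # simulate an incident on v2
```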

Performance Optimization and Cost Management

Private LLMs can be resource-intensive.

Best practices include:

- Model quantization and optimization

- Inference caching

- Workload prioritization

- Usage-based cost controls

These practices help keep performance predictable and costs aligned with ROI targets.
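Inference caching and usage-based cost controls fit together naturally: repeated requests are served from cache at no extra cost, and only cache misses count against a team's budget. A minimal sketch, assuming exact-match caching on a whitespace-normalized prompt, which is generally only safe for deterministic requests (for example, temperature 0) whose answers do not depend on the caller's permissions. `InferenceCache`, `TokenBudget`, and `serve` are hypothetical, and word count is used as a crude proxy where a real system would count tokens with the model's tokenizer.

```python
import hashlib
from collections import OrderedDict
from typing import Callable, Dict


class InferenceCache:
    """LRU cache of completions keyed on (model, whitespace-normalized prompt)."""

    def __init__(self, max_entries: int = 1024):
        self.max_entries = max_entries
        self._store: "OrderedDict[str, str]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model: str, prompt: str) -> str:
        normalized = " ".join(prompt.split())
        return hashlib.sha256(f"{model}\x00{normalized}".encode("utf-8")).hexdigest()

    def get_or_compute(self, model: str, prompt: str, compute: Callable[[str], str]) -> str:
        key = self._key(model, prompt)
        if key in self._store:
            self._store.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._store[key]
        self.misses += 1
        result = compute(prompt)
        self._store[key] = result
        if len(self._store) > self.max_entries:
            self._store.popitem(last=False)  # evict the least recently used entry
        return result


class TokenBudget:
    """Per-team usage caps; requests that would exceed the cap are rejected."""

    def __init__(self, limits: Dict[str, int]):
        self.limits = dict(limits)
        self.used = {team: 0 for team in self.limits}

    def charge(self, team: str, tokens: int) -> None:
        if self.used[team] + tokens > self.limits[team]:
            raise RuntimeError(f"{team} would exceed its budget of {self.limits[team]} tokens")
        self.used[team] += tokens


def serve(cache: InferenceCache, budget: TokenBudget, team: str, model: str,
          prompt: str, model_fn: Callable[[str], str]) -> str:
    """Cache hits are free; only misses are charged against the team's budget."""
    def compute(p: str) -> str:
        budget.charge(team, len(p.split()))  # crude word-count proxy for tokens (assumption)
        return model_fn(p)
    return cache.get_or_compute(model, prompt, compute)


cache = InferenceCache(max_entries=2)
budget = TokenBudget({"support": 10})
fake_model = lambda p: p.upper()  # stand-in for a real model call
serve(cache, budget, "support", "small-general", "reset my password", fake_model)
serve(cache, budget, "support", "small-general", "reset  my   password ", fake_model)
print(cache.hits, cache.misses, budget.used["support"])  # 1 1 3
```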

Compliance and Regulatory Readiness

Private LLM deployments simplify compliance with:

- GDPR and data sovereignty laws

- Industry regulations like HIPAA and PCI DSS

- Internal audit and risk frameworks

Enterprises gain documented, auditable AI systems.

Choosing the Right Private LLM Strategy

There is no one-size-fits-all model.

Enterprises should evaluate:

- Data sensitivity and regulatory exposure

- Scale and performance requirements

- Internal AI maturity

- Long-term GenAI roadmap

A phased approach often delivers the best results.
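As a starting point for that evaluation, the "Best suited for" notes on each deployment model can be encoded as a simple decision heuristic. This sketch is illustrative only: the `Assessment` fields and the order of the rules are assumptions drawn from those notes, and the output is a first recommendation to test against a fuller assessment, not a substitute for one.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Assessment:
    highly_regulated: bool
    strict_data_residency: bool
    existing_ai_infrastructure: bool
    in_house_mlops: bool
    modernizing_legacy_systems: bool
    needs_fast_time_to_value: bool


def recommend_deployment(a: Assessment) -> str:
    """Map assessment answers to a starting-point deployment model (heuristic only)."""
    sensitive = a.highly_regulated or a.strict_data_residency
    # On-premises: strict requirements AND the infrastructure and expertise to run it.
    if sensitive and a.existing_ai_infrastructure and a.in_house_mlops:
        return "on-premises"
    # Hybrid: gradual modernization, keeping data on-premises while using cloud compute.
    if a.modernizing_legacy_systems:
        return "hybrid"
    # Vendor-hosted dedicated instance: little internal AI operations capacity, speed matters.
    if not a.in_house_mlops and a.needs_fast_time_to_value:
        return "vendor-hosted dedicated instance"
    # Default: private cloud balances control and agility.
    return "private cloud"


print(recommend_deployment(Assessment(True, True, True, True, False, False)))
```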

Role of Enterprise GenAI Partners

Experienced GenAI partners help enterprises:

- Select optimal deployment models

- Design secure architectures

- Implement RAG and fine-tuning strategies

- Establish governance and MLOps frameworks

- Scale GenAI across business units

This reduces risk and accelerates enterprise adoption.

Private LLMs as the Foundation of Enterprise GenAI

Private LLM deployment models empower enterprises to:

- Protect data and IP

- Meet compliance requirements

- Customize AI for business value

- Build long-term AI capabilities

They transform generative AI from a tool into a strategic enterprise asset.

FAQs

1. Are private LLMs always more expensive than public models?

Not necessarily. Upfront infrastructure and setup costs are usually higher, but at sustained usage volumes private LLMs can be cost-competitive, and they often deliver better long-term ROI through security, control, and customization.

2. Can enterprises use multiple LLMs in a private setup?

Yes. Many enterprises adopt multi-model strategies to balance cost, performance, and specialization.
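A multi-model strategy usually needs a router that sends each request to the most suitable model. A minimal sketch, assuming the rule "cheapest model that supports the task and fits the prompt"; the catalog entries, capability tags, context limits, and relative costs are hypothetical placeholders, not real models or prices.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class ModelProfile:
    name: str
    capabilities: FrozenSet[str]
    max_context_tokens: int
    relative_cost: float  # cost per 1K tokens in arbitrary units, not real prices


# Hypothetical catalog; names, limits, and costs are placeholders.
CATALOG: List[ModelProfile] = [
    ModelProfile("small-general", frozenset({"chat", "summarize"}), 8_000, 1.0),
    ModelProfile("code-specialist", frozenset({"chat", "code"}), 16_000, 3.0),
    ModelProfile("large-general", frozenset({"chat", "summarize", "reasoning"}), 128_000, 10.0),
]


def pick_model(task: str, prompt_tokens: int, catalog: List[ModelProfile] = CATALOG) -> ModelProfile:
    """Choose the cheapest model that supports the task and fits the prompt."""
    candidates = [m for m in catalog
                  if task in m.capabilities and prompt_tokens <= m.max_context_tokens]
    if not candidates:
        raise LookupError(f"no model supports task {task!r} at {prompt_tokens} tokens")
    return min(candidates, key=lambda m: m.relative_cost)


print(pick_model("summarize", 2_000).name)
print(pick_model("summarize", 50_000).name)
```

Routing short, routine requests to a small model and reserving the large model for long or complex inputs is one common way such strategies balance cost against capability.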

3. How long does it take to deploy a private LLM?

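Timelines vary with scope and infrastructure readiness. With the right architecture and partner, an initial pilot can often be delivered in weeks rather than months, while a full production rollout typically takes longer.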